Deploying a local object storage server in 5 minutes

Sometimes we need object storage, whether for testing, for a demo, or simply to store data. Using the Amazon S3 service is an easy solution, but it may be an expensive one.

When you need S3 storage for testing or learning, Amazon is a good option, but maybe not the best one. You have to pay for it, and if you only need it for testing you probably do not need all the features that Amazon (or other providers) offer. In these cases, deploying your own object storage server can be a great idea.

We are going to use MinIO, a high-performance object storage solution distributed as open source. Although MinIO supports many configurations for different use cases, we will only focus on a simple deployment for testing.

The easiest way is to run MinIO from its container image. First, we create a directory for the service:

$ mkdir -p srv/s3-service

$

In this case we will launch the service as our local user, so the directory that stores all the data is created under the user's HOME directory. We will create two more directories:

- srv/s3-service/data, to store the data. The buckets will be created in this directory.

- srv/s3-service/certs, to store the certificate and key used to provide SSL access.

$ mkdir srv/s3-service/data

$ mkdir srv/s3-service/certs

$
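The three mkdir commands above can be collapsed into a single one. A small sketch, assuming bash (brace expansion is a bash feature) and the same location under HOME:

```shell
#!/usr/bin/env bash
# Create srv/s3-service with its data/ and certs/ subdirectories under HOME.
# -p creates missing parents and tolerates directories that already exist,
# so the command is safe to re-run.
mkdir -p "$HOME/srv/s3-service/"{data,certs}
```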

Now we are going to create a self-signed certificate to provide SSL access. We can also use third-party certificates, but we need to make sure that:

- The certificate key file has to be named private.key.

- The certificate file has to be named public.crt.

- The certificate needs to include the SAN (Subject Alternative Name) field with the service's IP addresses and DNS name.

$ cd srv/s3-service/certs

$ cat openssl.conf

[req]

distinguished_name = req_distinguished_name

req_extensions = v3_req

x509_extensions = v3_req

prompt = no

[req_distinguished_name]

C = ES

ST = SA

L = Salamanca

O = ACME

OU = CA

CN = mys3customservice.ddns.net

[v3_req]

subjectAltName = @alt_names

[alt_names]

IP.1 = 83.75.52.134

IP.2 = 10.0.2.100

DNS.1 = mys3customservice.ddns.net

$

Where:

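- req_distinguished_name: this information is used to build the certificate's DN (Distinguished Name). The most important field is CN, which here is the FQDN of your service. With the port mappings used in the podman command below, the S3 API will be reached at https://mys3customservice.ddns.net:9000 and the web console at https://mys3customservice.ddns.net:9001.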

- IP.1 is the IP address that your S3 service's FQDN resolves to.

- IP.2 is not mandatory. In my case, since I use this server for demos with applications deployed in the cloud, I use a dynamic DNS service to reach my home servers, so IP.1 is my router's public IP and IP.2 is the container's IP. There are two ways to find the container's IP:

  - Use a browser to connect to your S3 service. If you are using self-signed certificates, some warnings will be displayed. Once you reach the console, a warning will tell you that the FQDN does not match a 10.x IP. That IP is the IP.2 you are looking for.

  - The other way is a bit more complicated.
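The post shows the finished openssl.conf with cat. If you prefer to generate it, here is a minimal sketch of a hypothetical write_openssl_conf helper that writes a file with the same layout from the three values explained above (FQDN, IP.1 and the optional IP.2). The values in the last line are the ones used in this post; replace them with your own:

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes an openssl.conf with the same layout as above.
# Arguments: FQDN, IP the FQDN resolves to (IP.1), container IP (IP.2,
# may be empty to omit it) and an optional output file name.
write_openssl_conf() {
  local fqdn="$1" public_ip="$2" container_ip="$3" out="${4:-openssl.conf}"
  cat > "$out" <<EOF
[req]
distinguished_name = req_distinguished_name
req_extensions = v3_req
x509_extensions = v3_req
prompt = no

[req_distinguished_name]
# C must be a two-letter ISO 3166 country code (ES is Spain).
C = ES
ST = SA
L = Salamanca
O = ACME
OU = CA
CN = ${fqdn}

[v3_req]
subjectAltName = @alt_names

[alt_names]
IP.1 = ${public_ip}
${container_ip:+IP.2 = $container_ip}
DNS.1 = ${fqdn}
EOF
}

# Run from srv/s3-service/certs; replace the values with your own.
write_openssl_conf mys3customservice.ddns.net 83.75.52.134 10.0.2.100
```

Passing an empty string as the third argument leaves IP.2 out, matching the fact that it is optional.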

Now we have to create the certificate for the service. We can use a self-signed certificate:

$ openssl req -new -x509 -nodes -days 730 -keyout private.key -out public.crt -config openssl.conf

$

Or, if you have an OpenSSL CA configured, you can sign the certificate with that CA. First, create the CSR:

$ openssl req -new -out public.csr -newkey rsa:4096 -nodes -sha256 -keyout private.key -config openssl.conf

$ ls -lh

total 12K

-rw-r--r--. 1 jadebustos jadebustos 399 May 23 13:01 openssl.conf

-rw-------. 1 jadebustos jadebustos 3.2K May 23 13:01 private.key

-rw-r--r--. 1 jadebustos jadebustos 1.8K May 23 13:01 public.csr

$

The key and the CSR have been created, so now we have to sign the CSR with our CA.
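If your CA is simply a key and a certificate kept on disk, rather than a service you upload the CSR to, the signing step could look like the following minimal sketch. The sign_csr helper and the file names ca.crt and ca.key are hypothetical; adjust them to your CA:

```shell
#!/usr/bin/env bash
# Hypothetical helper: sign a CSR with a CA stored as plain files on disk.
# ca.crt and ca.key are assumed names for your CA certificate and key.
sign_csr() {
  local csr="${1:-public.csr}" ca_crt="${2:-ca.crt}" ca_key="${3:-ca.key}"
  local conf="${4:-openssl.conf}" out="${5:-public.crt}"
  # "openssl x509 -req" does not copy extensions from the CSR by default,
  # so the SAN section ([v3_req] -> [alt_names]) is re-applied from openssl.conf.
  openssl x509 -req -in "$csr" \
    -CA "$ca_crt" -CAkey "$ca_key" -CAcreateserial \
    -days 730 -sha256 \
    -extfile "$conf" -extensions v3_req \
    -out "$out"
}
```

For example, from srv/s3-service/certs: sign_csr public.csr ca.crt ca.key openssl.conf public.crt. Reusing the [v3_req] section keeps the SAN entries identical to the ones requested in the CSR.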


After uploading the CSR to our OpenSSL CA, we get back the signed certificate, which has to be saved as public.crt.

To check that the certificate was issued correctly:

$ openssl x509 -in public.crt -noout -text

...

X509v3 Subject Alternative Name:

IP Address:83.55.50.210, IP Address:10.0.2.100, DNS:mys3customservice.ddns.net

...

$

You should see something similar, with the DNS and IP entries you placed in the openssl.conf file.
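Since MinIO loads the key and the certificate by their fixed names, it can also be worth confirming, before starting the container, that private.key really belongs to public.crt. A minimal sketch with two hypothetical helpers, assuming bash (for process substitution) and OpenSSL 1.1.1 or later (for -ext): cert_summary prints only the subject, expiry date and SAN list, and cert_matches_key compares the public key embedded in the certificate with the one derived from the private key:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a quick check of the files MinIO will load.

# Print the subject, expiry date and SAN list (-ext needs OpenSSL 1.1.1+).
cert_summary() {
  local crt="${1:-public.crt}"
  openssl x509 -in "$crt" -noout -subject -enddate -ext subjectAltName
}

# Succeed only if the private key belongs to the certificate: both commands
# print the public key in the same PEM (SubjectPublicKeyInfo) format.
cert_matches_key() {
  local crt="${1:-public.crt}" key="${2:-private.key}"
  diff <(openssl x509 -in "$crt" -noout -pubkey) \
       <(openssl pkey -in "$key" -pubout) > /dev/null
}
```

Run from srv/s3-service/certs, cert_summary && cert_matches_key && echo "key and certificate match" prints the summary and confirms the pair.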

At this point we have the following directory hierarchy:

s3-service
├── certs
│   ├── openssl.conf
│   ├── private.key
│   └── public.crt
└── data

Where:

- s3-service/certs is the directory where the SSL certificate and the key are kept. The names of these files are important.

- s3-service/data is the directory where the data will be uploaded.

To start the S3 server, the contents of those two directories have to be mapped into the container as volumes:

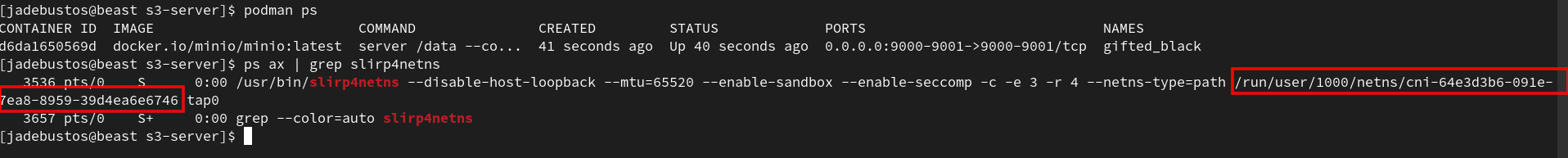

$ podman run --rm -d \
  -p 9000:9000 \
  -p 9001:9001 \
  -v /home/jadebustos/srv/s3-service/certs/private.key:/root/.minio/certs/private.key:Z \
  -v /home/jadebustos/srv/s3-service/certs/public.crt:/root/.minio/certs/public.crt:Z \
  -v /home/jadebustos/srv/s3-service/data:/data:Z \
  minio/minio server /data --console-address ":9001"
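Because the container is started detached (-d), podman returns immediately, before MinIO may be ready. A minimal sketch of a hypothetical wait_for_minio helper polls MinIO's liveness endpoint (/minio/health/live) until it answers or a timeout expires. It assumes curl is installed and skips TLS verification, since the certificate is self-signed and localhost is not one of its SANs:

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll MinIO's liveness endpoint until it answers.
# Arguments: base URL of the S3 API and a timeout in seconds.
wait_for_minio() {
  local url="${1:-https://localhost:9000}" timeout="${2:-30}" i
  for ((i = 0; i < timeout; i++)); do
    # -k: skip TLS verification, -s: silent, -f: fail on HTTP errors,
    # --max-time: do not hang on a single attempt, -o: discard the body.
    if curl -ksf --max-time 2 -o /dev/null "$url/minio/health/live"; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example, wait_for_minio https://localhost:9000 60 && echo "MinIO is up" waits up to about a minute.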

Now we can connect to the web console, which listens on port 9001 (https://mys3customservice.ddns.net:9001).

After logging in, go to Identity -> Service Accounts and create a service account to start using the S3 service. (If MINIO_ROOT_USER and MINIO_ROOT_PASSWORD are not set, as in the command above, MinIO uses the default root credentials minioadmin / minioadmin.)

If we look inside srv/s3-service/data, we can see a hidden directory named .minio.sys. MinIO stores its configuration there, so it is persisted across restarts and we do not need to create a service account every time we start the container.
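Once the service account exists, any S3 client can use it. As a sketch, assuming the AWS CLI is installed, a hypothetical s3_smoke_test helper creates a bucket and lists the buckets using the service account's access and secret keys. The bucket name demo-bucket is illustrative, and --no-verify-ssl is only there because of the self-signed certificate:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a bucket and list buckets on the MinIO service
# using the AWS CLI and the service account credentials from the console.
s3_smoke_test() {
  local endpoint="$1" access_key="$2" secret_key="$3" bucket="${4:-demo-bucket}"
  # Credentials are passed only to these aws invocations, not exported.
  # us-east-1 is MinIO's default region.
  local -a creds=(
    "AWS_ACCESS_KEY_ID=$access_key"
    "AWS_SECRET_ACCESS_KEY=$secret_key"
    "AWS_DEFAULT_REGION=us-east-1"
  )
  # --no-verify-ssl skips TLS verification for a self-signed certificate.
  local -a opts=(--endpoint-url "$endpoint" --no-verify-ssl)
  env "${creds[@]}" aws "${opts[@]}" s3 mb "s3://$bucket" || return 1
  env "${creds[@]}" aws "${opts[@]}" s3 ls
}
```

For example: s3_smoke_test https://mys3customservice.ddns.net:9000 ACCESS_KEY SECRET_KEY. If you would rather keep TLS verification on, the AWS CLI's --ca-bundle option pointing at public.crt (or at your CA certificate) can replace --no-verify-ssl.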
